When DevOps Dominoes Come Crashing Down

DevOps Domino Effect: It All Starts with a Change

Every service outage can ultimately be traced back to a change. Software systems don’t just start behaving differently; their behavior changes because the system’s environment or data has changed in some way, even if that change isn’t immediately evident.

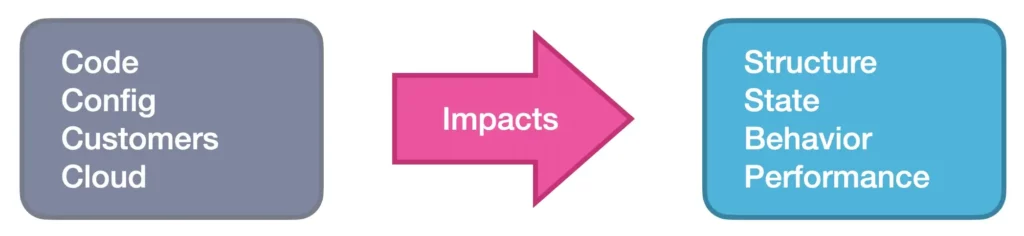

Whatever the symptom — a service becoming unavailable; an increase in HTTP status 500 errors; resource contention — all can be traced back to some change (or changes) in the system. Perhaps it’s a code change or a configuration change, or externally, a change in the customer behavior or in your cloud provider. We call these different types of changes the “Four C’s”:

The “Four C’s”

Some of those changes are far more frequent than others — in particular, code and configuration changes. We make these changes multiple times a day and, in fact, we need to make them in order to make progress with our applications. And it should come as no surprise that these changes — internal changes — are the root cause of most outages and performance degradation. In fact, a recent survey found that an overwhelming 76% of all outages can be traced back to changes in the system’s environment.

There’s no lack of concrete examples in published post-mortems:

- Cloudflare (2022): A change to network configuration (BGP policy) caused a ~1 hour outage across 50% of total requests.

- Google (2009): A change which mistakenly added “/” to the malicious sites list resulted in an undesired and confusing warning for every search result.

- Google Cloud Storage (2019): A change (made to reduce resource usage) led to a 4 hour outage — which definitely reduced usage, though not in the way Google intended.

In all of these cases, the planned change was initiated with good intentions — to improve the application functionality/performance — but led to an outcome that was temporarily worse than where these companies began.

The Search for the Change

During an outage, two threads are usually started in parallel:

- Immediate Mitigation: These are attempts to resuscitate the services: scale the infrastructure, manually restart if services are stuck, etc.

- Root Cause Analysis (RCA) and Remediation: This is the longer-term fix to prevent similar issues in the future — for example, changing the architecture of the application to avoid the fragile behavior, or setting up autoscaling for a service pool, etc.

Both of these efforts benefit from identifying the initial changes in the system — and these efforts always begin with the question: “Why?”

- Why is this incident happening right now?

- Why did an upstream service’s latency increase?

- Why did these particular alerts fire at the same time?

It’s the number of answered “whys” that make up the difference between enabling effective short-term mitigation and implementing a more permanent fix.

To answer each “why”, we look at what has changed that could contribute to the observed behavior, working backwards one step at a time. Each answer leads to more questions as we form, confirm or reject the hypotheses of what is happening. And with each answer, with each connected change, we get closer to the root cause(s) of an issue.

Unfortunately, finding changes in the system is a slow and manual process that spans multiple systems of record, and relies (strongly) on expert knowledge of the application architecture and previous behavior. The types of potential changes include code commits, CI/CD pipeline runs, Kubernetes deployment changes, docker images used, configuration changes, even certificate expirations. And the traces of these changes are scattered all over the place — in Slack, Jenkins, AWS EventBridge, random log files — if they were captured at all.

Combine this fragmented reality with siloed observability tools [6], and the result is that root causing the issue takes a significant chunk of time (66%!) in the process of fixing an outage [7]. In the typical incident flow of detect, diagnose, and resolve, the diagnose step dominates. In fact, you’ll see a common sentiment across post-mortems: “Once we found the root cause, the fix immediately followed”:

- PagerDuty 2021: “We have identified the current behaviour as related to a DNS issue and are rolling out a fix.”

- Cloudflare 2020: “Once the outage was understood, the Atlanta router was disabled and traffic began flowing normally again.”

- Heroku 2017: “Eventually, our engineers were able to work backward from our internal logging and trace the issue … With this confirmation, our engineers corrected the instance’s configuration, resulting in resolution of impact. “

Change Impact: Stopping DevOps Domino Effect

Understanding the effects of a change is critical for early detection and prevention of service issues, stopping the DevOps domino effect and minimizing the frequency and duration of outages.

But, beyond the metrics, understanding the impact of a change also provides peace of mind.

Change is constant, and so is the fear of change; what’s true for life in general is just as true for software development. And if we don’t understand the impact of a change — its effects — it can feel like we’re rolling the dice.

For example, consider the 10-hour outage suffered by DataDog back in September 2020. The root cause was a change that was implemented more than a month before the outage and had no immediate effects. But the risk was left dormant, waiting for just the right ingredients to mix together:

Late August, as part of a migration of that large intake cluster, we applied a set of changes to its configuration, including a faulty one.

…The local DNS resolver did answer more queries but it did not change any intake service level indicator that otherwise would have had us trigger an immediate investigation

…With this change in place and its impact missed during the change review, the conditions were set for an unforeseen failure starting with a routine operation a month later.

Understanding the impacts of a change shouldn’t be a reactive process, happening minutes or hours or months after the change. We shouldn’t wait for an SLO alert to fire in order to launch our investigation of what could have gone wrong. An engineer — the change author — should be able to proactively monitor and understand the effects of their changes as those effects are happening, not after they’ve caused a cascade of unintended consequences. This obviously helps in production — but also earlier, in the review process itself, to determine whether the change should be promoted to staging or production at all.

Imagine implementing a change, and watching it propagate through your system, and observing its intended effects, and validating that there were no adverse consequences. At the end, you’d get a nice summary of what had been affected, and all the major effects your change had led to, blast radius and otherwise. You could check that all was going well, and that the end result matched your expectations at every step.

Then, with full peace of mind, you could close your laptop for the day.

If this has piqued your interest, sign up for our beta and see change impact in action on CtrlStack.